Belén Saldías (CCC, PhD candidate) and Cassandra Lee (CCC, Masters candidate) are featured in New Realities. Stories of Art, AI & Work, an exhibition at the Museum for Communication Berlin.

They were invited to write about Generative AI and pathways for new ways of communication by Dr. Annabelle Hornung, Director at the Museum of Communication Nuremberg, who attended their workshop at the Ars Electronica Festival 2023 in Linz.

The exhibition is on view from April 26 to September 15, 2024.

Learn more about New Realities. Stories of Art, AI & Work here: https://new-realities.museumsstiftung.de/

Belén and Cassandra’s full contributions to the exhibition are below:

Belén Saldías, Human-Centered AI engineer and researcher at MIT

Some areas my research and work have focused on in the last few years are agency and transparency in AI. What does transparency mean in AI decision-making? Do we even all agree on what transparency in human decision-making means? Do we know where someone is coming from when making a decision? Can we ask for more information to complete our understanding when someone else is making a decision? Is the design and deployment of AI in the hands of a few or the hands of the many?

When I think about transparency in terms of AI, the questions above also come to mind. For AI, transparency spans at least a few stages: where these models learn from (data sources and content); how this data is processed and handled; the decisions made by the engineers and developers who train, evaluate, and deploy these AI models and systems; who is using these systems and for what purposes; what users’ backgrounds in AI are; and who governs these systems.

In each stage, there is a lot of room for information and visibility to be lost, making these AI systems more and more unintelligible. Further, even with the highest levels of description and interpretability methods, there is still room for a gap between what the creator wanted to say and what the user understands from such an explanation and, even more dangerously, room to confuse correlation with causality and fail to explain the reasoning behind these AIs.

Other fields have standards for communicating potential side effects and for deciding when something is ready to be released to the market, and we trust the entities developing these technologies; AI lacks accountability in these regards. On the one hand, an elevator only needs a little transparency for its users; we’ve come to trust how it works, and there are mechanisms to attend to any emergency should it happen. Similarly, we trust the FDA process and trials to release drugs and food products that are readily available to us. On the other hand, AI models are being released too soon: we are releasing them to the world at massive scale without even understanding their potential impact and unfair biases.

Who are we trying to make the models transparent for? Who is accountable for that transparency? Who should care about transparency and accountability? How much trust is there involved in the process of transparency and accountability?

While many people unquestioningly trust these models as if they are magic, there are those of us who wonder when we will feel comfortable with anyone deploying and using our generative AI systems at scale.

My hope is that this big wave of easily accessible AIs will allow more people to use them and challenge them in the same way we can reverse-engineer systems; people don’t need to become AI experts to try to reverse-engineer parts of these generative AIs.

Further, the challenge with understanding the core of how these AI models work is not only that they are prohibitively expensive to train but also that the companies that release them are making money much faster than they are being criticized; so the speed at which these models scale their flaws perpetuates and enlarges the disadvantages people face when they fail.

I’ll finish with this reflection. Imagine you meet a new person; this person seems amusing and can do so many things! Imagine you get to know them better and realize that they are as flawed as we all are; some of their values match your own, and some don’t, but you don’t realize that because you haven’t engaged in these conversations yet. Now imagine this becoming the template person and that, based on how marvelous they seemed at the beginning, you replicated this human millions of times, and this person is now making decisions about who gets accepted into which school, who gets a job, what learning content is offered to children, or what job opportunities are displayed to you online. Their biases and values are now spreading at a scale that can be uncontrollable, and people can be discriminated against in ways we did not even imagine, and in ways that research has well documented these AIs fail.

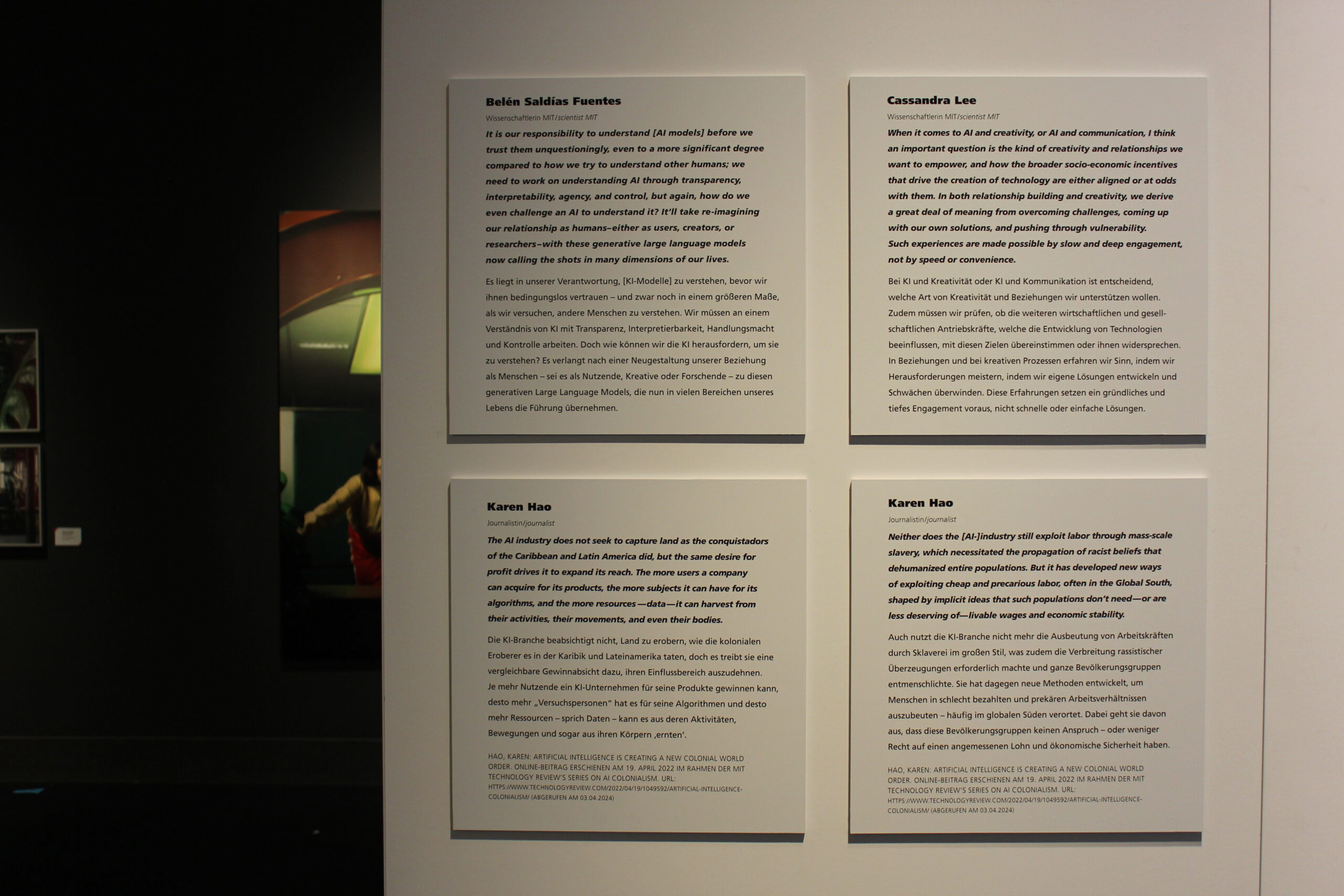

Replace this template human now with a generative AI model; how many people interacting with it really understand the values encoded in these AIs? The fact that they are fluent, which is what they’ve been trained to be, doesn’t mean they are flawless. It is our responsibility to understand them before we trust them unquestioningly, even to a more significant degree than we try to understand other humans; we need to work on understanding AI through transparency, interpretability, agency, and control, but again, how do we even challenge an AI to understand it? It’ll take re-imagining our relationship as humans (whether as users, creators, or researchers) with these generative large language models now calling the shots in many dimensions of our lives.

Cassandra Lee, designer and researcher at MIT

When it comes to AI and creativity, or AI and communication, I think an important question is what kind of creativity and relationships we want to empower, and how the broader socio-economic incentives that drive the creation of technology are either aligned or at odds with them. In both relationship building and creativity, we derive a great deal of meaning from overcoming challenges, coming up with our own solutions, and pushing through vulnerability. Such experiences are made possible by slow and deep engagement, not by speed or convenience. As an entire industry, the tech sector tends to produce goods and services built around the philosophy of convenience, and to sell users on the promise of avoiding failure, vulnerability, and discomfort, all of which are foundational to meaningful creative practice. AI is a product of this same industry, and it’s not quite clear how designers can create supportive infrastructure around AI that empowers critical thinking, thoughtful human agency, and acceptance of slowness and restraint. Many of my favorite AI-based projects demonstrate that it is possible, but I am skeptical of the broader societal pressures that place significant value on efficiency, and I question whether there are sufficient incentives for designers and developers to create AI systems that align with principles of high-quality creativity.

I am most excited by generative systems which empower collaborative creativity through language. Our words, expressed through writing or conversation, are a unifying form of expression. That we can so easily use our words in this medium demonstrates a profound shift in how we act creatively and engage with one another. With AI art in particular, multiple people can use their words to contribute to the same image in an iterative, participatory process. This makes me think seriously about group dialogue as a form of art making. In a world where communicating feels so difficult, I’m excited by the possibility that AI enables us to use our words to gather around art making.